Metrics for object detection. Although online competitions use their own metrics to evaluate object detection, only some of them offer reference code snippets.

State-of-the-art methods are expected to publish results mainly using AP. Average Precision (AP) and mean Average Precision (mAP) are the most popular metrics used to evaluate object detection models such as Faster R-CNN, Mask R-CNN, and YOLO, among others.
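As a minimal sketch of how the two metrics relate, mAP is commonly taken as the plain mean of the per-class AP values. The function name and the class names below are hypothetical, chosen only for illustration.

```python
def mean_average_precision(ap_per_class):
    """Return the mean of per-class AP values.

    ap_per_class: dict mapping a class name to its AP (a float in [0, 1]).
    The dict layout is an assumption made for this sketch.
    """
    if not ap_per_class:
        raise ValueError("need at least one class to compute mAP")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical per-class AP values, for illustration only.
example_map = mean_average_precision({"dog": 0.5, "cat": 1.0})
```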

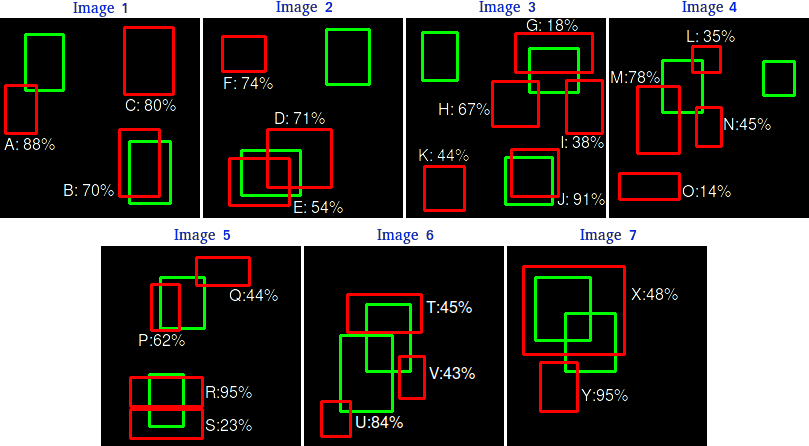

In object detection, the model typically predicts multiple bounding boxes for each object and, based on the confidence score of each bounding box, removes unnecessary boxes that fall below a threshold value. We need to choose the threshold value based on our requirements. Average Precision (AP), for instance, is a popular metric for evaluating the accuracy of object detectors by estimating the area under the curve (AUC) of the precision × recall relationship.
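The area under the precision × recall curve can be estimated in several ways. The sketch below uses all-point interpolation, one common convention; it is not necessarily the exact procedure any particular competition uses. The function name and the input layout (two parallel lists, with recall in increasing order) are assumptions for illustration.

```python
def average_precision(recalls, precisions):
    """Estimate the area under the precision x recall curve.

    recalls, precisions: parallel lists, one entry per detection after
    sorting detections by descending confidence (recall is non-decreasing).
    Uses all-point interpolation, which is one convention among several.
    """
    # Add sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]

    # Replace each precision by the maximum precision to its right,
    # so the curve becomes monotonically non-increasing.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])

    # Sum the rectangle areas between consecutive recall values.
    ap = 0.0
    for i in range(1, len(r)):
        ap += (r[i] - r[i - 1]) * p[i]
    return ap
```

With this convention, a detector whose every detection is correct and which finds every object scores an AP of 1.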

These competition datasets have pretty stringent object detection evaluation metrics. In this article, we will learn about the evaluation metrics that are commonly used in object detection. Object detection metrics serve as a measure to assess how well the model performs on an object detection task.

If you are working on an object detection or instance segmentation algorithm, you have probably come across the messy pile of different kinds of performance metrics: AP, several variants of AP, and mAP.

Set IoU threshold value to 0. It can be set to 0. This script calculates the standard object detection metrics, using the ground truth tags provided by the human expert as well as the predictions made by the model.
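The script itself is not reproduced here. As a rough sketch of what such a calculation usually involves, the code below matches predictions to ground-truth boxes for a single class in a single image and accumulates precision and recall. The function names, the `(x1, y1, x2, y2)` box layout, and the default threshold of 0.5 (the PASCAL VOC convention) are assumptions for illustration. Real evaluators pool detections across all images before sorting.

```python
def _iou(a, b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, ground_truths, iou_threshold=0.5):
    """Return (precisions, recalls), one entry per detection.

    detections: list of (score, box) pairs; ground_truths: list of boxes.
    The default threshold of 0.5 is an assumed, commonly used value.
    """
    matched = [False] * len(ground_truths)
    tp = fp = 0
    precisions, recalls = [], []
    # Visit detections from most to least confident.
    for _score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(ground_truths):
            overlap = _iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        # A true positive needs enough overlap with a not-yet-matched box;
        # duplicates of an already matched object count as false positives.
        if best_idx >= 0 and best_iou >= iou_threshold and not matched[best_idx]:
            matched[best_idx] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(ground_truths) if ground_truths else 0.0)
    return precisions, recalls
```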

Object detection has two principal aims: finding object locations and recognising the object category (dog or cat), summarised as object localisation and classification. Localisation separates objects from the background, while classification distinguishes one object from others of different categories.

As a consequence, the participants are expected to produce a detection for each of the relevant classes, even if each detection corresponds to the same object instance. So I did all this to get things up and running with the Object Detection API, trained on some images, and ran evaluation on other images. I decided to use the weighted PASCAL metrics set for evaluation. In TensorBoard I get an IoU for every class and also mAP, which is fine to see, and now come the questions.

Published Date: 27. Original article was published by Kiprono Elijah Koech on Artificial Intelligence on Medium.

To use the COCO object detection metrics, add `metrics_set: "coco_detection_metrics"` to the `eval_config` message in the config file. To use the COCO instance segmentation metrics, add `metrics_set: "coco_mask_metrics"` to the `eval_config` message in the config file. How does one navigate the maze of competing objectives?
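As a sketch, the relevant fragment of the config file would look roughly like this. Only the `metrics_set` field comes from the text above; any other fields an actual `eval_config` block contains are omitted.

```
eval_config {
  metrics_set: "coco_detection_metrics"
}
```

For instance segmentation, the value would be `"coco_mask_metrics"` instead.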

Tests and comparisons are performed between common object detection networks without multi-scaled deformable convolutional networks and the new object detection networks with multi-scaled deformable convolutional networks.

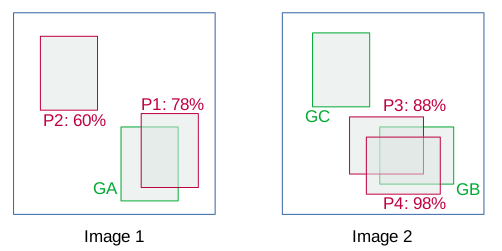

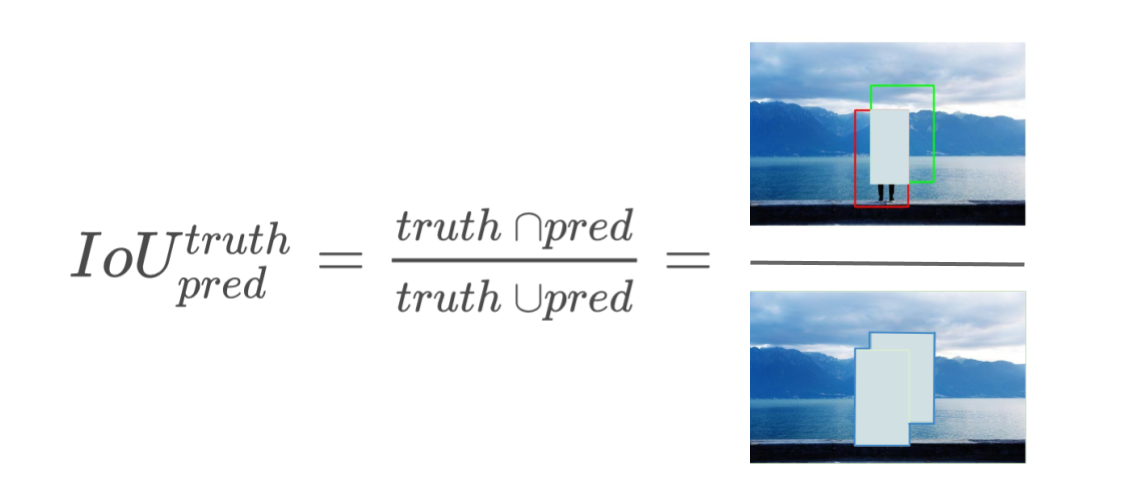

Figure 9: Measuring object detection performance using Intersection over Union. Notice how the predicted bounding box nearly perfectly overlaps with the ground-truth bounding box. Recall values for IOUs of 0. Here is one final example of computing Intersection over Union: Figure 10: Intersection over Union for evaluating object detection algorithms. The authors also present.
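Intersection over Union is the area of overlap between the predicted and ground-truth boxes divided by the area of their union. A minimal sketch follows, assuming boxes are given as `(x1, y1, x2, y2)` corner coordinates with `x1 < x2` and `y1 < y2`; the function name and box layout are illustrative choices, not taken from the figures.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

A prediction that coincides exactly with the ground truth gives an IoU of 1, and boxes that do not overlap give 0.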

In this case, the simulated radar sensors have a high enough resolution to generate multiple detections per object. If these detections are not. Object locations and scores, specified as a two-column table containing the bounding boxes and scores for each detected object.

For multiclass detection, a third column contains the predicted label for each detection. Why do we use the F-score instead of mutual information? On which data should an object detection model be evaluated? Creating a custom dataset for object detection. To show you how the single-class object detection feature works, let us create a custom model to detect pizzas.

In this section, we will review the history of object detection in multiple aspects, including milestone detectors, object detection datasets, metrics, and the evolution of key techniques. With this new feature, we don’t need to.
