Label ranking average precision (LRAP) is the average, over each ground truth label assigned to each sample, of the ratio of true vs. total labels ranked at or above that label (that is, with a score at least as high). This metric is used in multilabel ranking problems, where the goal is to give better rank to the labels associated with each sample.
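To make the definition concrete, here is a small sketch that computes LRAP straight from it and compares the result with sklearn's label_ranking_average_precision_score. The helper name lrap_from_definition is hypothetical, it ignores sample weights, and it assumes sklearn's convention of scoring rows where all or none of the labels are relevant as 1.

```python
import numpy as np
from sklearn.metrics import label_ranking_average_precision_score


def lrap_from_definition(y_true, y_score):
    """LRAP computed directly from its definition (illustrative, no sample weights)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_score = np.asarray(y_score, dtype=float)
    per_sample = []
    for truth, scores in zip(y_true, y_score):
        relevant = np.flatnonzero(truth)
        if relevant.size == 0 or relevant.size == truth.size:
            # All-relevant or no-relevant rows: the ranking is meaningless, scored as 1
            per_sample.append(1.0)
            continue
        ratios = []
        for j in relevant:
            # Labels ranked at or above label j (score >= score of label j)
            at_or_above = scores >= scores[j]
            # Share of those labels that are true labels
            ratios.append((at_or_above & truth).sum() / at_or_above.sum())
        per_sample.append(np.mean(ratios))
    return float(np.mean(per_sample))


y_true = np.array([[1, 0, 0], [0, 0, 1]])
y_score = np.array([[0.75, 0.5, 1.0], [1.0, 0.2, 0.1]])

manual = lrap_from_definition(y_true, y_score)
reference = label_ranking_average_precision_score(y_true, y_score)
print(manual, reference)  # both 5/12, about 0.4167
```

In the first sample the only true label is ranked second behind a false one (1/2); in the second it is ranked last of three (1/3), so the mean is 5/12.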

I am calculating mean average precision at top k retrieved objects. The code reads the two lists from CSV files, then takes a sample from one list, computes its Euclidean distance to all samples in the other list, sorts them, and finally takes the top k objects to see whether the object is present among the retrieved samples.
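A minimal sketch of that pipeline, assuming the CSV loading has already produced NumPy feature arrays and that a retrieved item counts as relevant when its label equals the query's label (an assumption; the original does not say how a match is decided). The function names are hypothetical, and AP@k is normalized by min(k, number of relevant items), which is one common convention among several.

```python
import numpy as np


def average_precision_at_k(is_relevant, k):
    """AP@k for one query; `is_relevant` is a boolean array in ranked order."""
    n_relevant = int(is_relevant.sum())
    if n_relevant == 0:
        return 0.0
    top = is_relevant[:k].astype(float)
    # Precision after each of the first k retrieved items
    precision_at_i = np.cumsum(top) / np.arange(1, len(top) + 1)
    # Average the precisions at the relevant positions (one common normalization)
    return float((precision_at_i * top).sum() / min(k, n_relevant))


def mean_average_precision_at_k(query_feats, query_labels, gallery_feats, gallery_labels, k):
    # Euclidean distance from every query to every gallery item: shape (n_queries, n_gallery)
    dists = np.linalg.norm(query_feats[:, None, :] - gallery_feats[None, :, :], axis=-1)
    # Nearest first; a stable sort keeps ties in gallery order
    order = np.argsort(dists, axis=1, kind="stable")
    aps = [
        average_precision_at_k(gallery_labels[order[q]] == query_labels[q], k)
        for q in range(len(query_feats))
    ]
    return float(np.mean(aps))


queries = np.array([[0.0, 0.0], [10.0, 10.0]])
query_labels = np.array([0, 1])
gallery = np.array([[0.0, 1.0], [10.0, 11.0], [0.0, 2.0], [10.0, 12.0]])
gallery_labels = np.array([1, 1, 0, 1])

result = mean_average_precision_at_k(queries, query_labels, gallery, gallery_labels, k=2)
print(result)  # 0.75
```

The first query finds its only relevant item at rank 2 (AP@2 = 0.5), the second finds relevant items at ranks 1 and 2 (AP@2 = 1.0).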

Common questions include how sklearn computes the metric, whether any sklearn module can return average precision, and how to interpret label ranking average precision. This metric is linked to the average_precision_score function, but is based on the notion of label ranking instead of precision and recall.
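One way to see the link: if each sample's row of labels is treated as a tiny binary retrieval problem, averaging average_precision_score over the rows gives the same value as label_ranking_average_precision_score. This is a sketch on made-up scores and assumes no tied scores within a row, where the two can differ.

```python
import numpy as np
from sklearn.metrics import average_precision_score, label_ranking_average_precision_score

y_true = np.array([[1, 0, 1], [0, 1, 0]])
y_score = np.array([[0.9, 0.8, 0.1], [0.3, 0.2, 0.6]])

lrap = label_ranking_average_precision_score(y_true, y_score)

# Treat each sample's label ranking as its own binary ranking problem
per_sample_ap = [average_precision_score(t, s) for t, s in zip(y_true, y_score)]
mean_ap = float(np.mean(per_sample_ap))
print(lrap, mean_ap)  # both 7/12, about 0.5833
```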

Average precision is calculated for each object.

Average precision is computed as

$$\text{AP} = \sum_n (R_n - R_{n-1}) P_n$$

where P refers to precision, R refers to recall, and the subscript n denotes the different threshold values. Is there any reliable (open-source) implementation? From the function documentation, the average precision "summarizes a precision-recall curve as the weighted mean of precisions achieved at each threshold, with the increase in recall from the previous threshold used as the weight." When there are no tied scores, this is the average of the precision obtained every time a new positive sample is recalled.
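A short sketch checking both descriptions against sklearn on a four-sample toy problem (the data is made up). The first computation applies the sum over (R_n - R_{n-1}) P_n to the points returned by precision_recall_curve; the second averages the precision at each rank where a positive appears.

```python
import numpy as np
from sklearn.metrics import average_precision_score, precision_recall_curve

y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])

# 1) Step-wise sum over the precision-recall curve.
precision, recall, _ = precision_recall_curve(y_true, y_scores)
# recall comes back in decreasing order, so the differences are negated
ap_formula = -np.sum(np.diff(recall) * precision[:-1])

# 2) Mean of the precision each time a new positive is recalled (no ties here).
order = np.argsort(-y_scores, kind="stable")
hits = y_true[order]
precision_at_rank = np.cumsum(hits) / np.arange(1, len(hits) + 1)
ap_ranking = precision_at_rank[hits == 1].mean()

ap_sklearn = average_precision_score(y_true, y_scores)
print(ap_formula, ap_ranking, ap_sklearn)  # all 5/6, about 0.8333
```

The positives sit at ranks 1 and 3, so the precisions at those ranks are 1 and 2/3, and their mean is 5/6.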

It is the same as the area under the precision-recall curve (AUC) if precision is interpolated by constant segments, and it is the definition used most often by TREC.
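The interpolation choice matters. In this sketch on the same kind of toy data, average_precision_score (constant segments) is compared with sklearn's generic auc function, which uses the trapezoidal rule (linear interpolation) and therefore gives a different, more optimistic-looking value here.

```python
import numpy as np
from sklearn.metrics import auc, average_precision_score, precision_recall_curve

y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])

precision, recall, _ = precision_recall_curve(y_true, y_scores)

ap_step = average_precision_score(y_true, y_scores)  # constant (step) segments
auc_trapezoid = auc(recall, precision)               # linear interpolation between points
print(ap_step, auc_trapezoid)  # 5/6 vs 19/24
```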

To macro-average, just take the average of the precision and recall of the system on the different sets.

Here is a script to show the result. In binary classification settings, create simple data and try to differentiate the two classes. The average precision is then reported as somewhere around 0. In order to create a confusion matrix with nonzero numbers across all the cells, only one feature is used for training the model.
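A sketch of such a script, under assumptions not stated in the original: the binary data is the first two iris classes, the single feature is sepal width (column 1), and the model is a LogisticRegression. Continuous probabilities feed the average precision, while hard predictions feed the confusion matrix. No particular score is claimed here; it depends on the split.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import average_precision_score, confusion_matrix
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
# Binary setting (assumption): keep only the first two iris classes
mask = y < 2
# A single feature (column 1, sepal width), where the two classes overlap somewhat
X_one = X[mask][:, [1]]
y_bin = y[mask]

X_train, X_test, y_train, y_test = train_test_split(
    X_one, y_bin, test_size=0.5, random_state=0, stratify=y_bin
)
clf = LogisticRegression().fit(X_train, y_train)

# Probability of the positive class for AP, hard labels for the confusion matrix
y_score = clf.predict_proba(X_test)[:, 1]
ap = average_precision_score(y_test, y_score)
cm = confusion_matrix(y_test, clf.predict(X_test))
print(f"Average precision: {ap:.2f}")
print(cm)
```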

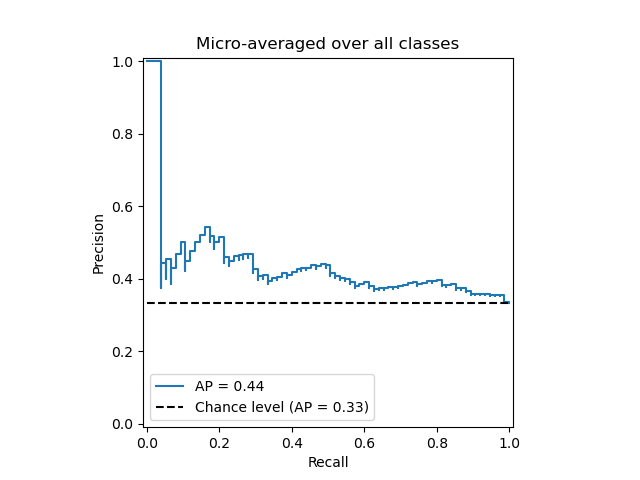

Pay attention to the training data X. The micro-average F-score is simply the harmonic mean of the micro-averaged precision and recall. Macro-average method: the method is straightforward.

For example, the macro-average precision is the plain (unweighted) mean of the per-set precisions.
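The difference between the two averages can be sketched with per-class counts from a confusion matrix on a small multiclass example (made-up labels). Micro-averaging pools the true positive, false positive and false negative counts before dividing; macro-averaging divides per class and then takes the unweighted mean. Both are checked against precision_recall_fscore_support.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_recall_fscore_support

y_true = np.array([0, 1, 2, 0, 1, 2])
y_pred = np.array([0, 2, 1, 0, 0, 1])

cm = confusion_matrix(y_true, y_pred)  # rows: true class, columns: predicted class
tp = np.diag(cm).astype(float)
fp = cm.sum(axis=0) - tp
fn = cm.sum(axis=1) - tp

# Micro-average: pool the counts over all classes, then compute the scores
micro_p = tp.sum() / (tp.sum() + fp.sum())
micro_r = tp.sum() / (tp.sum() + fn.sum())
micro_f = 2 * micro_p * micro_r / (micro_p + micro_r)  # harmonic mean

# Macro-average: compute per class, then take the unweighted mean
per_p = tp / (tp + fp)
per_r = tp / (tp + fn)
denom = per_p + per_r
per_f = np.divide(2 * per_p * per_r, denom, out=np.zeros_like(denom), where=denom > 0)
macro_p, macro_r, macro_f = per_p.mean(), per_r.mean(), per_f.mean()

sk_micro = precision_recall_fscore_support(y_true, y_pred, average="micro")
sk_macro = precision_recall_fscore_support(y_true, y_pred, average="macro")
print((micro_p, micro_r, micro_f), sk_micro[:3])
print((macro_p, macro_r, macro_f), sk_macro[:3])
```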

I need to return the weighted average precision of y_test and predictions in sklearn, as a float. In Python, I calculated average precision (with average_precision_score) and precision (with classification_report) while testing model metrics.

However, I got different answers from the two. I read the documentation for both and know the equations are different, but I was hoping to get an intuitive explanation of the differences between them. The average precision score corresponds to the area under the precision-recall curve.
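A sketch covering both questions on made-up data. First, precision_score with average="weighted" gives the support-weighted mean of per-class precisions as a single number. Second, the two "precisions" differ because average_precision_score summarizes the whole precision-recall curve from continuous scores, while classification_report measures precision at one operating point; the 0.5 threshold used here is an assumption.

```python
import numpy as np
from sklearn.metrics import average_precision_score, classification_report, precision_score

# Part 1: weighted-average precision of hard predictions, returned as a float
y_test = [0, 0, 0, 1, 1, 2]
predictions = [0, 1, 1, 1, 2, 2]
weighted_precision = float(precision_score(y_test, predictions, average="weighted"))

# Same value by hand: per-class precision weighted by each class's support
per_class = precision_score(y_test, predictions, average=None)
support = np.bincount(y_test)
manual_weighted = float(np.sum(per_class * support) / support.sum())

# Part 2: threshold-free average precision vs. precision at a single threshold
y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])
ap = average_precision_score(y_true, y_scores)

y_pred = (y_scores >= 0.5).astype(int)  # assumed decision threshold
report = classification_report(y_true, y_pred, output_dict=True)
precision_pos = report["1"]["precision"]
print(weighted_precision, ap, precision_pos)
```

At the 0.5 threshold only the top-scored sample is predicted positive, and it is correct, so precision is 1.0; the average precision (5/6) also accounts for the positive that is ranked below a negative.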

In PyTorch Ignite, an EpochMetric can wrap a compute function such as average_precision_compute_fn(y_preds, y_targets, activation=None), which imports its scorer from sklearn inside a try block. Parameters for sklearn average precision score when using Random Forest.

I have been trying to fiddle with sklearn metrics, particularly average_precision_score. The precision is intuitively the ability of the classifier not to label as positive a sample that is negative.
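A sketch tying these together, assuming a synthetic imbalanced dataset from make_classification and a RandomForestClassifier. The compute function is a simplified, hypothetical stand-in in the spirit of the EpochMetric helper mentioned above, not Ignite's actual source. The main parameter choice is the second argument: pass the positive-class probability from predict_proba, not the hard labels from predict.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import average_precision_score
from sklearn.model_selection import train_test_split


def average_precision_compute_fn(y_preds, y_targets, activation=None):
    """Hypothetical epoch-level helper: AP over all predictions collected in an epoch."""
    if activation is not None:
        y_preds = activation(y_preds)  # e.g. a sigmoid applied to raw logits
    return average_precision_score(np.asarray(y_targets), np.asarray(y_preds))


X, y = make_classification(n_samples=500, weights=[0.8, 0.2], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)

# Probability of the positive class (column 1) ranks the samples properly
proba_pos = rf.predict_proba(X_test)[:, 1]
ap_scores = average_precision_score(y_test, proba_pos)
# Hard 0/1 labels collapse the ranking to a single threshold
ap_labels = average_precision_score(y_test, rf.predict(X_test))

ap_via_fn = average_precision_compute_fn(proba_pos, y_test)
print(ap_scores, ap_labels, ap_via_fn)
```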

For single-label multiclass problems, the micro-average option makes precision_score and recall_score mathematically equivalent (and, as a result, equivalent to f1_score and fbeta_score).
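A quick check of that equivalence on made-up labels. In single-label multiclass data every wrong prediction is one false positive (for the predicted class) and one false negative (for the true class), so the pooled counts match and all micro scores, including accuracy, coincide. The multilabel case at the end shows the equivalence does not carry over once samples can have several or zero labels.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, fbeta_score, precision_score, recall_score

# Single-label multiclass: one true and one predicted label per sample
y_true = [0, 1, 2, 0, 1, 2]
y_pred = [0, 2, 1, 0, 0, 1]
micro = [
    precision_score(y_true, y_pred, average="micro"),
    recall_score(y_true, y_pred, average="micro"),
    f1_score(y_true, y_pred, average="micro"),
    fbeta_score(y_true, y_pred, beta=0.5, average="micro"),
    accuracy_score(y_true, y_pred),
]
print(micro)  # all equal to 1/3

# Multilabel indicator matrices: total FP (0) and total FN (2) differ here
Y_true = np.array([[1, 1, 0], [0, 1, 1]])
Y_pred = np.array([[1, 0, 0], [0, 1, 0]])
p_ml = precision_score(Y_true, Y_pred, average="micro")
r_ml = recall_score(Y_true, Y_pred, average="micro")
print(p_ml, r_ml)  # 1.0 0.5
```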

Find the micro-average, macro-average and weighted-average F-scores using sklearn. 04: Create a tabular format (DataFrame) with precision, recall and F-score. 05: Set up.
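A sketch of the tabular step, assuming pandas is available and using made-up labels. Each averaging mode of precision_recall_fscore_support becomes one row of a DataFrame with Precision, Recall and FScore columns.

```python
import pandas as pd
from sklearn.metrics import precision_recall_fscore_support

y_true = [0, 0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 2, 2]

rows = {}
for avg in ("micro", "macro", "weighted"):
    # The fourth return value (support) is None when an average is requested
    p, r, f, _ = precision_recall_fscore_support(y_true, y_pred, average=avg)
    rows[avg] = {"Precision": p, "Recall": r, "FScore": f}

# One row per averaging mode, one column per metric
table = pd.DataFrame.from_dict(rows, orient="index")
print(table.round(3))
```

With this data, the micro row is 0.5 in every column (half the predictions are correct), and the weighted recall also equals 0.5, since support-weighted recall reduces to accuracy.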
