Mean average precision for a set of queries is the mean of the average precision scores for each query. The precision is intuitively the ability of the classifier not to label as positive a sample that is negative.
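As a rough sketch of the definition above, the snippet below computes average precision for each query's ranked result list and then takes the mean. The function names and the toy relevance lists are illustrative assumptions, and the sketch assumes that every relevant item for a query appears somewhere in its ranked list.

```python
def average_precision(relevance):
    """AP for one ranked list; relevance[i] is 1 if the item at rank i+1 is relevant."""
    hits = 0
    precisions = []
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            # Precision measured at the rank of each relevant item.
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(queries):
    """Mean of the per-query AP scores."""
    return sum(average_precision(q) for q in queries) / len(queries)


# Hypothetical relevance judgments for two queries.
queries = [[1, 0, 1, 0], [0, 1, 1]]
map_score = mean_average_precision(queries)
print(round(map_score, 4))
```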

You can also think of PR AUC as the average of precision scores calculated for each recall threshold.

However, it is quite tricky to interpret and compare the scores that are seen. In a classification task, a precision score of 1.0 for a class C means that every item labeled as belonging to class C does indeed belong to class C (but says nothing about the number of items from class C that were not labeled correctly), whereas a recall of 1.0 means that every item from class C was labeled as belonging to class C (but says nothing about how many items from other classes were incorrectly also labeled as belonging to class C).
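To make the asymmetry concrete, here is a small sketch with made-up labels in which class C gets perfect precision but poor recall. The helper name `precision_recall` and the data are assumptions for illustration only.

```python
def precision_recall(y_true, y_pred, label):
    """Precision and recall for a single class label."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    predicted = sum(p == label for p in y_pred)  # items labeled as the class
    actual = sum(t == label for t in y_true)     # items truly in the class
    precision = tp / predicted if predicted else 0.0
    recall = tp / actual if actual else 0.0
    return precision, recall


# Hypothetical data: only one of the three true C items is labeled C.
y_true = ["C", "C", "C", "D", "D"]
y_pred = ["C", "D", "D", "D", "D"]

precision_c, recall_c = precision_recall(y_true, y_pred, "C")
print(precision_c, recall_c)
```

Everything labeled C really is C, so precision is perfect, yet two of the three C items were missed, so recall is low.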

For the macro-average, just take the average of the precision and recall of the system on different sets. Label ranking average precision (LRAP) measures the average precision of the predictive model, but instead of using precision-recall it measures the label rankings of each sample.
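A minimal pure-Python sketch of LRAP may help here. For each sample and each true label, it asks what fraction of the labels ranked at or above that label are also true, then averages. The function name `lrap` and the toy data are assumptions, and the sketch assumes every sample has at least one true label.

```python
def lrap(y_true, y_score):
    """Label ranking average precision over a list of samples."""
    total = 0.0
    for truth, scores in zip(y_true, y_score):
        relevant = [j for j, t in enumerate(truth) if t]
        sample = 0.0
        for j in relevant:
            # Rank of label j: how many labels score at least as high.
            rank = sum(s >= scores[j] for s in scores)
            # How many of those are true labels (label j included).
            true_at_or_above = sum(scores[k] >= scores[j] for k in relevant)
            sample += true_at_or_above / rank
        total += sample / len(relevant)
    return total / len(y_true)


# Hypothetical multilabel data: 2 samples, 3 labels each.
y_true = [[1, 0, 0], [0, 0, 1]]
y_score = [[0.75, 0.5, 1.0], [1.0, 0.2, 0.1]]

lrap_value = lrap(y_true, y_score)
print(round(lrap_value, 4))
```

In the first sample the true label is ranked second (score 1/2), and in the second it is ranked third (score 1/3), so the result is their mean.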

Its value is always greater than 0, and the best value of this metric is 1. We could just average all of the final class scores, but this would dilute the scores of common classes and boost less frequent classes. Instead, we can simply use a weighted average! Using the two example images, we had Cats, Dog, and Bird.
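The difference between a plain average and a weighted average of per-class scores can be sketched as follows. The per-class scores and counts below are invented for illustration (the counts from the example images are not given here), and the function names are assumptions.

```python
def macro_average(scores):
    """Unweighted mean: every class counts equally."""
    return sum(scores.values()) / len(scores)


def weighted_average(scores, support):
    """Mean weighted by how many instances each class has."""
    total = sum(support.values())
    return sum(scores[c] * support[c] for c in scores) / total


# Hypothetical per-class scores and instance counts.
scores = {"cat": 0.9, "dog": 0.5, "bird": 0.4}
support = {"cat": 8, "dog": 1, "bird": 1}

macro = macro_average(scores)
weighted = weighted_average(scores, support)
print(round(macro, 3), round(weighted, 3))
```

With these numbers the common class dominates the weighted average, while the plain average lets the two rare classes pull the score down.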

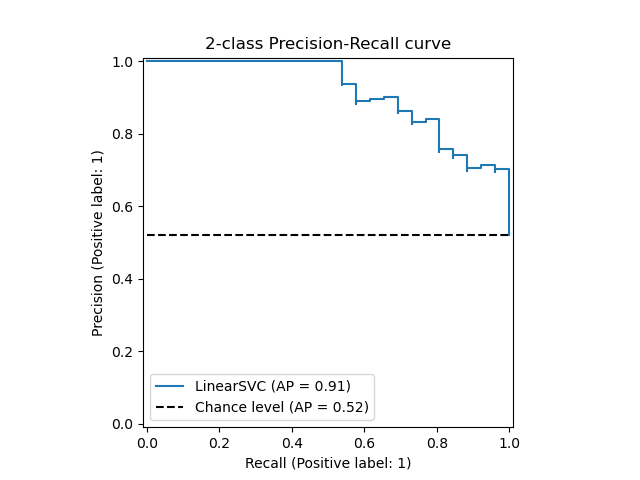

Conveniently, this ends up being the same as averaging all. For example, you might count any model output less than 0.5 as negative. But sometimes (especially if your classes are not balanced, or if you want to favor precision over recall or vice versa), you may want to vary this threshold. Precision refers to precision at a particular decision threshold. Rather than comparing curves, it's sometimes useful to have a single number that characterizes the performance of a classifier.
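The effect of moving the decision threshold can be seen in a short sketch. The helper `precision_recall_at` and the scores are hypothetical, and reporting precision as 1.0 when nothing is predicted positive is a convention chosen for this sketch.

```python
def precision_recall_at(y_true, y_score, threshold):
    """Precision and recall when scores >= threshold count as positive."""
    y_pred = [1 if s >= threshold else 0 for s in y_score]
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 1.0  # sketch convention
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical binary labels and model outputs.
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

strict = precision_recall_at(y_true, y_score, 0.5)
lenient = precision_recall_at(y_true, y_score, 0.3)
print(strict, lenient)
```

Lowering the threshold from 0.5 to 0.3 trades precision for recall on this toy data.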

A common metric is the average precision. This can actually mean one of several things. Strictly, the average precision is precision averaged across all values of recall between 0 and 1. The macro-average F-score is simply the harmonic mean of the macro-averaged precision and recall. The method is straightforward.

Hence, from the image, we can see that it is useful for evaluating localisation models, object detection models, and segmentation models. From the function documentation, the average precision “summarizes a precision-recall curve as the weighted mean of precisions achieved at each threshold, with the increase in recall from the previous threshold used as the weight.”

This implementation is not interpolated and is different from outputting the area under the precision-recall curve with the trapezoidal rule, which uses linear interpolation and can be too optimistic. Here’s another way to understand average precision: AP is also used to score document retrieval.
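The distinction between the non-interpolated weighted mean and the trapezoidal area can be sketched in plain Python. This is an illustration of the two formulas, not scikit-learn's actual code. The function names and data are assumptions, the scores are assumed to be distinct (no ties), and the trapezoidal version assumes the curve starts at recall 0 with precision 1.

```python
def pr_points(y_true, y_score):
    """(recall, precision) after each item, from highest score down."""
    order = sorted(range(len(y_score)), key=lambda i: y_score[i], reverse=True)
    total_pos = sum(y_true)
    tp = 0
    points = []
    for k, i in enumerate(order, start=1):
        tp += y_true[i]
        points.append((tp / total_pos, tp / k))
    return points


def step_average_precision(points):
    """Sum of precision weighted by the increase in recall (no interpolation)."""
    ap, prev_recall = 0.0, 0.0
    for recall, precision in points:
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def trapezoid_area(points):
    """Area under the curve with linear interpolation between points."""
    pts = [(0.0, 1.0)] + points  # assumed starting point of the curve
    area = 0.0
    for (r0, p0), (r1, p1) in zip(pts, pts[1:]):
        area += (r1 - r0) * (p0 + p1) / 2
    return area


# Hypothetical binary labels and scores.
points = pr_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
ap = step_average_precision(points)
area = trapezoid_area(points)
print(round(ap, 4), round(area, 4))
```

The two numbers differ on the same data, which is why average precision and a trapezoidal PR AUC should not be compared directly.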

Average precision computes the average precision value for recall over the range 0 to 1. One reported issue describes the average_precision_score() function in sklearn as not returning a correct AUC value. For a list of retrieved documents, it’s probably best if all of them are relevant. If only some are relevant, say five of them, then it’s much better if the relevant ones are shown first. It would be bad if the first five were irrelevant and the good ones only started from the sixth, wouldn’t it?
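The ordering intuition can be checked with a quick sketch. It assumes a list of ten results of which five are relevant (the total of ten is an assumption inferred from "started from sixth"), and the helper name is illustrative.

```python
def ranked_average_precision(relevance):
    """Mean of the precision values at the rank of each relevant result."""
    hits = 0
    precisions = []
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


# Ten results, five relevant: relevant ones first versus last.
relevant_first = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
relevant_last = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

ap_first = ranked_average_precision(relevant_first)
ap_last = ranked_average_precision(relevant_last)
print(round(ap_first, 4), round(ap_last, 4))
```

Both lists contain the same five relevant results, but the one that shows them first scores far higher.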

The AP score reflects this. So the average precision score gives you the average precision over all the different threshold choices. I am trying to calculate the cross-validated precision score for my multi-class classification model.

To do this I used make_scorer to set the average to weighted. In practice, a higher mAP value indicates a better performance of your neural net, given your ground-truth and set of classes.
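One way the make_scorer approach mentioned above might look is sketched below. The dataset (iris) and the model (logistic regression) are stand-ins chosen for illustration, not the setup from the original question.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import make_scorer, precision_score
from sklearn.model_selection import cross_val_score

# Stand-in multi-class data and model (assumptions for this sketch).
X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Wrap precision_score so that cross-validation uses average="weighted".
weighted_precision = make_scorer(precision_score, average="weighted")

scores = cross_val_score(model, X, y, cv=5, scoring=weighted_precision)
print(scores.mean())
```

Passing `scoring="precision_weighted"` to `cross_val_score` should give the same behaviour without building the scorer by hand.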
