The `compile()` method takes a `metrics` argument, which is a list of metrics, for example `metrics.MeanSquaredError()`. Metric values are also returned when the model is evaluated. A typical setup creates a `Sequential()` model, adds a `Dense` layer with `activation="sigmoid"` and `input_dim=2`, and then compiles the model.

Recall can be calculated for a single label, for example the first label. The binary accuracy metric creates two local variables, `total` and `count`, that are used to compute the frequency with which `y_pred` matches `y_true`.

This frequency is ultimately returned as binary accuracy: an idempotent operation that simply divides `total` by `count`. `BinaryCrossentropy`, by contrast, is the crossentropy metric class to be used when there are only two label classes (0 and 1).
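The `total` and `count` bookkeeping described above can be sketched in plain Python. This is a minimal illustration, not Keras code: the class name `BinaryAccuracySketch` and the default threshold of 0.5 are assumptions made for the example.

```python
class BinaryAccuracySketch:
    """Hypothetical stand-in for a stateful binary accuracy metric."""

    def __init__(self, threshold=0.5):
        self.threshold = threshold  # assumed cut-off for calling a prediction "1"
        self.total = 0.0  # running number of correct predictions
        self.count = 0.0  # running number of predictions seen

    def update_state(self, y_true, y_pred):
        # Accumulate matches batch by batch.
        for truth, score in zip(y_true, y_pred):
            predicted = 1 if score > self.threshold else 0
            self.total += 1.0 if predicted == truth else 0.0
            self.count += 1.0

    def result(self):
        # Reading the result does not modify the state, so it is idempotent.
        return self.total / self.count if self.count else 0.0


metric = BinaryAccuracySketch()
metric.update_state([1, 1, 0, 0], [0.98, 1.0, 0.0, 0.6])
accuracy = metric.result()  # three of the four predictions match
```

Calling `result()` twice in a row returns the same value, because only `update_state()` changes `total` and `count`.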

Choosing a good metric for your problem is usually a difficult task. `AUC` computes the approximate AUC (area under the curve) via a Riemann sum.
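To make the Riemann-sum idea concrete, here is a small pure-Python sketch for the ROC curve. It is an approximation written for illustration, not the library's implementation: the function name `roc_auc_riemann`, the 200 evenly spaced thresholds, and the trapezoidal rule are all assumptions, and scores are assumed to lie between 0 and 1.

```python
def roc_auc_riemann(y_true, y_score, num_thresholds=200):
    """Approximate the area under the ROC curve with a trapezoidal sum."""
    positives = sum(1 for t in y_true if t == 1)
    negatives = len(y_true) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("both classes must be present")

    # Evenly spaced thresholds, padded slightly at both ends so the curve
    # includes the (0, 0) and (1, 1) corner points.
    eps = 1e-7
    inner = [i / (num_thresholds - 1) for i in range(1, num_thresholds - 1)]
    thresholds = [-eps] + inner + [1.0 + eps]

    points = []
    for th in thresholds:
        tp = sum(1 for t, s in zip(y_true, y_score) if s > th and t == 1)
        fp = sum(1 for t, s in zip(y_true, y_score) if s > th and t == 0)
        points.append((fp / negatives, tp / positives))  # (FPR, TPR)

    # Sort by false positive rate and add up the trapezoids between points.
    points.sort()
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


auc = roc_auc_riemann([0, 0, 1, 1], [0.0, 0.5, 0.3, 0.9])  # 0.75 for this toy data
```

More thresholds give a finer approximation at the cost of more work per evaluation.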

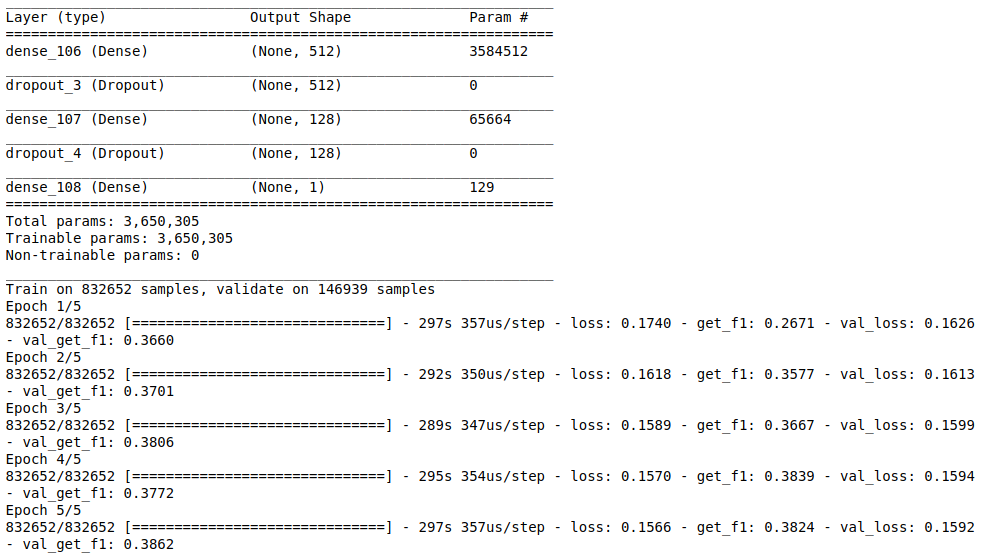

`BinaryCrossentropy` computes the crossentropy metric between the labels and predictions. You can provide logits of classes as `y_pred`, since the argmax of logits and the argmax of probabilities are the same. F1 is an important metric to monitor while training your model, and F1 metrics can be shown for a model with every training epoch.
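The claim that the argmax of logits and of probabilities agree follows from softmax being strictly increasing in each logit. A quick check in plain Python, where the helper names `softmax` and `argmax` and the sample logits are made up for this example:

```python
import math


def softmax(logits):
    """Convert logits to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


logits = [2.0, -1.0, 0.5]
probabilities = softmax(logits)

# Softmax preserves the ordering of its inputs, so the winning class is unchanged.
same_class = argmax(logits) == argmax(probabilities)
```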

If `sample_weight` is given, the true negatives metric calculates the sum of the weights of true negatives. Including `Metric` from `tensorflow`, as suggested in this answer, does not change anything. No, they are all different things used for different purposes in your code.
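As a rough sketch of what "the sum of the weights of true negatives" means, the following plain-Python function counts true negatives with optional per-sample weights. The function name `weighted_true_negatives` and the 0.5 threshold are assumptions for illustration.

```python
def weighted_true_negatives(y_true, y_pred, sample_weight=None, threshold=0.5):
    """Sum the weights of samples that are negative and predicted negative."""
    if sample_weight is None:
        # Without weights every sample counts once.
        sample_weight = [1.0] * len(y_true)
    total = 0.0
    for truth, score, weight in zip(y_true, y_pred, sample_weight):
        if truth == 0 and score <= threshold:
            total += weight
    return total


unweighted = weighted_true_negatives([0, 1, 0, 0], [1, 0, 0, 0])
weighted = weighted_true_negatives([0, 1, 0, 0], [1, 0, 0, 0],
                                   sample_weight=[0, 0, 1, 0])
```

With no weights the result is a plain count (2.0 here); with the weights above only the third sample contributes (1.0).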

There are two parts in your code. For example: `model.` However, most of what's written. While there are a lot of examples online. View a real-time plot of training metrics (by epoch). Use the global `keras.`

The `validation_split` argument is a float between 0 and 1: the fraction of the training data to be used as validation data. The model will set apart this fraction of the training data, will not train on it, and will evaluate the loss and any model metrics on this data at the end of each epoch.

Keras offers five different accuracy metrics for evaluating classifiers.
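Setting apart a fraction of the training data can be pictured with a short plain-Python sketch. The helper `split_for_validation` is hypothetical, and taking the held-out samples from the end of the data (before any shuffling) is an assumption made here to mirror how Keras documents this option.

```python
def split_for_validation(samples, validation_fraction):
    """Hold out the last `validation_fraction` of the samples for validation."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be strictly between 0 and 1")
    split_at = int(len(samples) * (1.0 - validation_fraction))
    # The first part is used for training, the remainder only for evaluation.
    return samples[:split_at], samples[split_at:]


train_part, validation_part = split_for_validation(list(range(8)), 0.25)
```

Here six samples stay in the training part and the last two are only used to evaluate metrics.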

This article attempts to explain these metrics at a fundamental level by exploring their components and calculations with experimentation. You can plot the training metrics by epoch using the `plot()` method. Summary: add `Precision`, `Recall`, and `MeanIoU` metrics.

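Metrics in Keras operate entirely on Tensor objects, so you need to define a function that operates on Tensors to process all of the output results. Once the function is defined, pass it directly to the model. Let's take a look at those. `AUC` computes the approximate AUC (area under the curve) for the ROC curve via a Riemann sum.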

Classification metrics. This way, in the history of the model you will find both metrics, indexed as "average_precision_at_k_1" and "average_precision. In "micro averaging", we'd calculate the performance, e.g. It was developed with the goal of enabling fast experimentation.
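One way to read "micro averaging" is that counts are pooled across classes before the score is computed. The sketch below contrasts this with macro averaging, which is added here only for comparison and is not discussed in the text above; the function name and the toy counts are made up for the example.

```python
def micro_macro_precision(per_class_counts):
    """per_class_counts: list of (true_positives, false_positives) per class."""
    total_tp = sum(tp for tp, _ in per_class_counts)
    total_fp = sum(fp for _, fp in per_class_counts)
    # Micro: pool the counts of all classes, then compute one precision.
    micro = total_tp / (total_tp + total_fp)
    # Macro: compute precision per class, then take the unweighted mean.
    macro = sum(tp / (tp + fp) for tp, fp in per_class_counts) / len(per_class_counts)
    return micro, macro


# A large, well-predicted class and a small, poorly predicted one.
counts = [(90, 10), (1, 9)]
micro, macro = micro_macro_precision(counts)
```

The large class dominates the micro average (91/110), while the macro average treats both classes equally (0.5).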

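An alternative way would be to split your dataset into training and test sets and use the test set to make predictions. Then, since you know the real labels, calculate precision and recall manually. The `SGD` and `RMSprop` optimizers are available, and an optimizer can be created with `sgd = SGD(lr=)` before it is passed to the model.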
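The manual calculation mentioned above could look like the following sketch. It assumes binary labels and predictions that have already been thresholded to 0 or 1; the function name `precision_recall` and the toy data are illustrative only.

```python
def precision_recall(y_true, y_pred):
    """Compute precision and recall for binary labels and 0/1 predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


y_test = [0, 1, 1, 1]       # real labels of the held-out test part
predictions = [1, 0, 1, 1]  # thresholded model predictions on the test part
precision, recall = precision_recall(y_test, predictions)
```

In this toy case there are two true positives, one false positive, and one false negative, so both precision and recall come out at 2/3.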

As the name "stateful" suggests, tensorflow.
